On 2 nodes in the Hetzner cloud, I want to create a cluster to try out kubespray. kubespray is Ansible-based and uses kubeadm under the hood. It does not create VMs, so I try it out on 2 existing cloud servers (using the Hetzner provider). See a discussion of the tools kubespray, kubeadm and kops here.

I am adapting the example from https://github.com/kubernetes-sigs/kubespray/blob/master/docs/setting-up-your-first-cluster.md or https://kubespray.io/#/docs/getting-started.


chrisp:kubespray sandorm$ git clone https://github.com/kubernetes-sigs/kubespray.git

Cloning into 'kubespray'...

chrisp:kubespray sandorm$ git checkout release-2.21

branch 'release-2.21' set up to track 'origin/release-2.21'.

Switched to a new branch 'release-2.21'

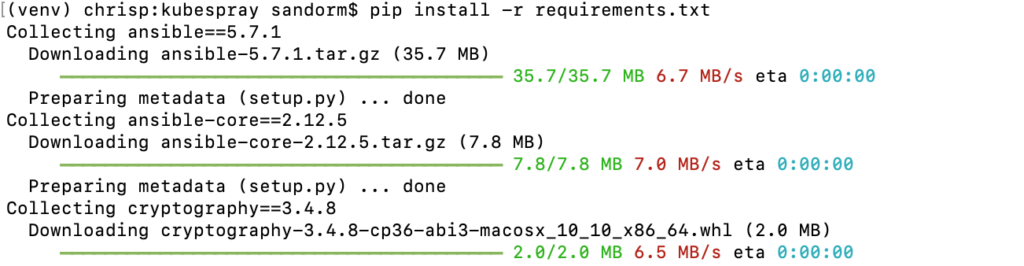

# in the same checkout dir, create a venv folder

chrisp:kubespray sandorm$ python3 -m venv venv

chrisp:kubespray sandorm$ source venv/bin/activate

# upgrade pip

[notice] A new release of pip available: 22.3.1 -> 23.0.1

[notice] To update, run: pip install --upgrade pip

...

Successfully installed pip-23.0.1

Now set up the inventory with the IPs of our servers, using the helper script contrib/inventory_builder/inventory.py:
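inventory.py writes into inventory/mycluster, which the kubespray README creates beforehand by copying inventory/sample. The README also installs kubespray's Python requirements (which pin the Ansible version) before anything else. A minimal sketch of both steps as shell functions, assuming you are in the root of the release-2.21 checkout with the venv active; the function names are hypothetical, not part of kubespray.

```shell
# Hypothetical helpers; run from the root of the kubespray checkout, venv active.

# Install the Python dependencies pinned by kubespray (Ansible among them).
install_kubespray_requirements() {
  python3 -m pip install -r requirements.txt
}

# Copy the sample inventory under a cluster name (default: mycluster).
# -r copies recursively, -p preserves mode, ownership and timestamps.
copy_sample_inventory() {
  local name="${1:-mycluster}"
  if [ -e "inventory/$name" ]; then
    echo "inventory/$name already exists, not overwriting" >&2
    return 1
  fi
  cp -rp inventory/sample "inventory/$name"
}
```

Calling install_kubespray_requirements followed by copy_sample_inventory prepares the directory that the inventory builder then fills.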

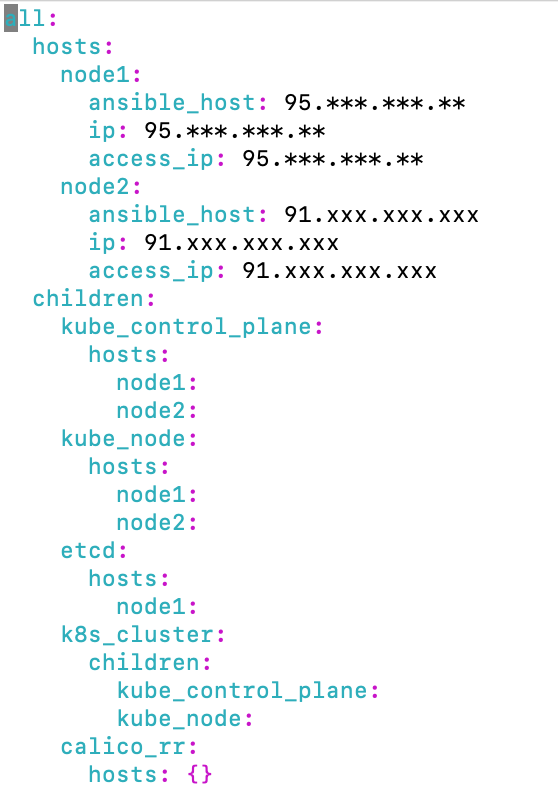

(venv) chrisp:kubespray sandorm$ declare -a IPS=(95.**.**.** 91.**.**.**)

(venv) chrisp:kubespray sandorm$ CONFIG_FILE=inventory/mycluster/hosts.yaml python3 contrib/inventory_builder/inventory.py ${IPS[@]}

DEBUG: Adding group all

DEBUG: Adding group kube_control_plane

DEBUG: Adding group kube_node

DEBUG: Adding group etcd

DEBUG: Adding group k8s_cluster

DEBUG: Adding group calico_rr

DEBUG: adding host node1 to group all

DEBUG: adding host node2 to group all

DEBUG: adding host node1 to group etcd

DEBUG: adding host node1 to group kube_control_plane

DEBUG: adding host node2 to group kube_control_plane

DEBUG: adding host node1 to group kube_node

DEBUG: adding host node2 to group kube_node

We have an inventory file now.

The blog recommends removing the access_ip lines (used to define how other nodes access the node).
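Removing them can be scripted. A sketch, assuming the generated file is inventory/mycluster/hosts.yaml; it uses grep -v and a temporary file instead of sed -i, because the -i syntax differs between BSD sed on macOS and GNU sed on Linux. The function name is hypothetical.

```shell
# Hypothetical helper: delete every access_ip line from a kubespray inventory.
strip_access_ip() {
  local file="${1:-inventory/mycluster/hosts.yaml}" tmp
  tmp=$(mktemp) || return 1
  grep -v '^[[:space:]]*access_ip:' "$file" > "$tmp"
  # grep -v exits 1 if no line is left; only status 2 (e.g. missing file) is fatal
  if [ $? -gt 1 ]; then
    rm -f "$tmp"
    return 1
  fi
  cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}
```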

The main config has nearly 400 lines:

chrisp:kubespray sandorm$ wc -l kubespray/inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml

382 kubespray/inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml

As network plugin, I selected cilium. Now try the playbook:
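Selecting cilium comes down to the kube_network_plugin variable in k8s-cluster.yml, which is calico in the sample inventory. The sketch below sets it and starts cluster.yml with the invocation from the kubespray README; both helper names are hypothetical. The -i path has to match the file the inventory builder wrote (inventory/mycluster/hosts.yaml in the command above); a wrong path produces a warning like the one below.

```shell
# Hypothetical helper: choose the CNI plugin in the cluster config.
# "^kube_network_plugin:" does not match kube_network_plugin_multus.
set_network_plugin() {
  local plugin="$1"
  local file="${2:-inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml}"
  local tmp
  tmp=$(mktemp) || return 1
  sed "s/^kube_network_plugin:.*/kube_network_plugin: $plugin/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Hypothetical helper: run the main kubespray playbook, becoming root on the nodes.
run_cluster_playbook() {
  ansible-playbook -i "${1:-inventory/mycluster/hosts.yaml}" \
    --become --become-user=root cluster.yml
}
```

Usage: set_network_plugin cilium, then run_cluster_playbook.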

...

[WARNING]: Unable to parse /Volumes/x8crucial/Software/kubespray/kubespray/inventory/mycluster/hosts.yml as an inventory source

...

# nodes unreachable

fatal: [node2]: UNREACHABLE! =>

# use ansible ping

ansible -i inventory/mycluster/hosts.yml all -m ping -b

...

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @

# only root can log in, and the changed host key has to be fixed

# create a user with the same name as my local user and set up SSH access
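Both fixes can be done from the Mac. A sketch, assuming the servers run a Debian/Ubuntu image where useradd creates a group with the same name as the user, that root can log in with your key, and that root's authorized_keys should be reused for the new user; the helper names are hypothetical. ssh-keygen -R deletes the stale entry from ~/.ssh/known_hosts, so the next connection asks you to accept the new key.

```shell
# Hypothetical helper: print the commands (to run as root on the node) that
# create a login user and give it root's authorized SSH keys.
user_bootstrap_script() {
  local user="$1"
  cat <<EOF
id -u $user >/dev/null 2>&1 || useradd --create-home --shell /bin/bash $user
install -d -m 700 -o $user -g $user /home/$user/.ssh
install -m 600 -o $user -g $user /root/.ssh/authorized_keys /home/$user/.ssh/authorized_keys
EOF
}

# Hypothetical helper: forget the old host key, then bootstrap the user as root.
prepare_node() {
  local ip="$1" user="${2:-$(whoami)}"
  ssh-keygen -R "$ip"                          # drop the stale known_hosts entry
  user_bootstrap_script "$user" | ssh "root@$ip" sh
}
```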

ansible -i inventory/mycluster/hosts.yml -m ping all

[WARNING]: Skipping callback plugin 'ara_default', unable to load

node2 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

# get rid of the ARA warning by activating the venv and installing ARA

# see https://github.com/ansible-community/ara

pip install ansible ara[server]

Requirement already satisfied: ansible in ./venv/lib/python3.11/site-packages (5.7.1)

...

Requirement already satisfied: pycparser in ./venv/lib/python3.11/site-packages (from cffi>=1.12->cryptography->ansible-core~=2.12.5->ansible) (2.21)

Installing collected packages: pytz, whitenoise, tzdata, sqlparse, pygments, dynaconf, asgiref, pytz-deprecation-shim, Django, tzlocal, djangorestframework, django-health-check, django-filter, django-cors-headers

Successfully installed Django-3.2.18 asgiref-3.6.0 django-cors-headers-3.14.0 django-filter-22.1 django-health-check-3.17.0 djangorestframework-3.14.0 dynaconf-3.1.12 pygments-2.14.0 pytz-2022.7.1 pytz-deprecation-shim-0.1.0.post0 sqlparse-0.4.3 tzdata-2022.7 tzlocal-4.2 whitenoise-6.4.0

ansible -i inventory/mycluster/hosts.yml -m ping all

[WARNING]: Skipping callback plugin 'ara_default', unable to load

node2 | SUCCESS => {

"changed": false,

"ping": "pong"

}

node1 | SUCCESS => {

"changed": false,

"ping": "pong"

}

(venv) chrisp:kubespray sandorm$ git grep ,ara

ansible.cfg:callbacks_enabled = profile_tasks,ara_default

ansible --version

ansible [core 2.12.5]

ARA (see https://github.com/ansible-community/ara) is a callback plugin that records Ansible runs for reporting and analysis. Either disable it for the moment in ansible.cfg, or continue with the ARA documentation.
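Disabling it means dropping ara_default from callbacks_enabled in ansible.cfg. For the current shell only, the ANSIBLE_CALLBACKS_ENABLED environment variable (the environment name of that setting since ansible-core 2.11) overrides the file without editing it. A sketch of both options; the helper name is hypothetical.

```shell
# Option 1, current shell only: the environment variable overrides ansible.cfg.
export ANSIBLE_CALLBACKS_ENABLED=profile_tasks

# Option 2, persistent: hypothetical helper that removes ",ara_default" from the
# callbacks_enabled line of an ansible.cfg (default: the one in the checkout).
disable_ara_callback() {
  local cfg="${1:-ansible.cfg}" tmp
  tmp=$(mktemp) || return 1
  sed '/^callbacks_enabled/s/,ara_default//' "$cfg" > "$tmp" && cat "$tmp" > "$cfg"
  rm -f "$tmp"
}
```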

# get ara to work

export ANSIBLE_CALLBACK_PLUGINS=$(python3 -mara.setup.callback_plugins)

export ARA_LOG_LEVEL=DEBUG

ansible -i inventory/mycluster/hosts.yml -m ping all

echo $ANSIBLE_CALLBACK_PLUGINS

/Volumes/x8crucial/Software/kubespray/kubespray/venv/lib/python3.11/site-packages/ara/plugins/callback

Start the playbook again… this time it needs sudo rights:


fatal: [node2]: FAILED! => {"msg": "Missing sudo password"}

# allow my user (sandorm) to become root
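A sketch of creating such a drop-in safely, run as root on each node; the helper names are hypothetical. The rule is first written to a name containing a dot, which sudo skips when reading /etc/sudoers.d, and is only renamed into place after visudo has validated it. As an alternative to NOPASSWD, ansible-playbook's --ask-become-pass (-K) option prompts for the sudo password.

```shell
# Hypothetical helper: print a sudoers rule granting passwordless sudo to a user.
sudoers_rule() {
  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$1"
}

# Hypothetical helper (run as root on the node): install the rule as a drop-in.
# A user name containing '.' would need another file name, since sudo skips those.
install_sudoers_dropin() {
  local user="$1" file="/etc/sudoers.d/$1"
  sudoers_rule "$user" > "$file.new" || return 1   # ignored by sudo until renamed
  chmod 0440 "$file.new"
  if visudo -cf "$file.new"; then
    mv "$file.new" "$file"
  else
    rm -f "$file.new"
    return 1
  fi
}
```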

cat /etc/sudoers.d/amelio

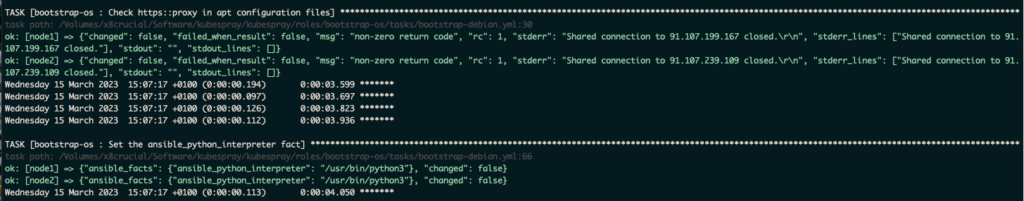

sandorm ALL=(ALL) NOPASSWD: ALL

Now the playbook starts and works on both nodes!

The playbook runs for a while. Watch for the cilium download, nginx, coredns and other interesting steps. You will also see nerdctl, a Docker-compatible CLI for containerd, as well as etcdctl, snapshots being taken and the OpenSSL configuration being done. The task [kubernetes/node : Modprobe nf_conntrack_ipv4] will fail, but the failure is ignored. The same happens with TASK [kubernetes/control-plane : Check which kube-control nodes are already members of the cluster].
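A likely reason for the nf_conntrack_ipv4 failure is that Linux 4.19 merged nf_conntrack_ipv4 into nf_conntrack, so newer kernels have no module by that name. A small sketch to check which name applies on a node; the helper is hypothetical and relies on GNU sort -V for the version comparison.

```shell
# Hypothetical helper: print the conntrack module name for a kernel release
# (defaults to the running kernel).
conntrack_module_for() {
  local release="${1:-$(uname -r)}"
  local version="${release%%-*}"   # e.g. 5.15.0-67-generic -> 5.15.0
  # sort -V (version sort) puts the smaller version first
  if [ "$(printf '%s\n' 4.19 "$version" | sort -V | head -n 1)" = 4.19 ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```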

TASK [download : debug] **************************************************************************************************************************************************************************************

task path: /Volumes/x8crucial/Software/kubespray/kubespray/roles/download/tasks/download_container.yml:21

ok: [node1] => {

"msg": "Pull quay.io/cilium/cilium:v1.12.1 required is: True"

}

ok: [node2] => {

"msg": "Pull quay.io/cilium/cilium:v1.12.1 required is: True"

}

...

TASK [kubernetes/control-plane : Set kubeadm_discovery_address] **********************************************************************************************************************************************

task path: /Volumes/x8crucial/Software/kubespray/kubespray/roles/kubernetes/control-plane/tasks/kubeadm-secondary.yml:2

ok: [node1] => {"ansible_facts": {"kubeadm_discovery_address": "91.107.199.167:6443"}, "changed": false}

ok: [node2] => {"ansible_facts": {"kubeadm_discovery_address": "91.107.199.167:6443"}, "changed": false}

Wednesday 15 March 2023 17:00:46 +0100 (0:00:00.099) 0:13:04.508 *******

...

TASK [kubernetes-apps/cluster_roles : Kubernetes Apps | Wait for kube-apiserver] *****************************************************************************************************************************

task path: /Volumes/x8crucial/Software/kubespray/kubespray/roles/kubernetes-apps/cluster_roles/tasks/main.yml:2

ok: [node1] => {"attempts": 1, "audit_id": "cbd13cf8-eeca-4aae-bd2f-7851279eb7a2", "cache_control": "no-cache, private", "changed": false, "connection": "close", "content_length": "2", "content_type": "text/plain; charset=utf-8", "cookies": {}, "cookies_string": "", "date": "Wed, 15 Mar 2023 16:01:38 GMT", "elapsed": 0, "msg": "OK (2 bytes)", "redirected": false, "status": 200, "url": "https://127.0.0.1:6443/healthz", "x_content_type_options": "nosniff", "x_kubernetes_pf_flowschema_uid": "5ebfb960-386b-473a-b51a-4cdec708b7c2", "x_kubernetes_pf_prioritylevel_uid": "7f21700a-43d9-47bf-895a-0aba1a106418"}

Wednesday 15 March 2023 17:01:39 +0100 (0:00:01.810) 0:13:57.135 *******

...

TASK [network_plugin/cilium : Stop if cilium_version is < v1.10.0] *******************************************************************************************************************************************

task path: /Volumes/x8crucial/Software/kubespray/kubespray/roles/network_plugin/cilium/tasks/check.yml:51

ok: [node1] => {

"changed": false,

"msg": "All assertions passed"

}

ok: [node2] => {

"changed": false,

"msg": "All assertions passed"

}

...

TASK [kubernetes-apps/ansible : Kubernetes Apps | Lay Down CoreDNS templates]

...

RUNNING HANDLER [kubernetes/preinstall : Preinstall | wait for the apiserver to be running]

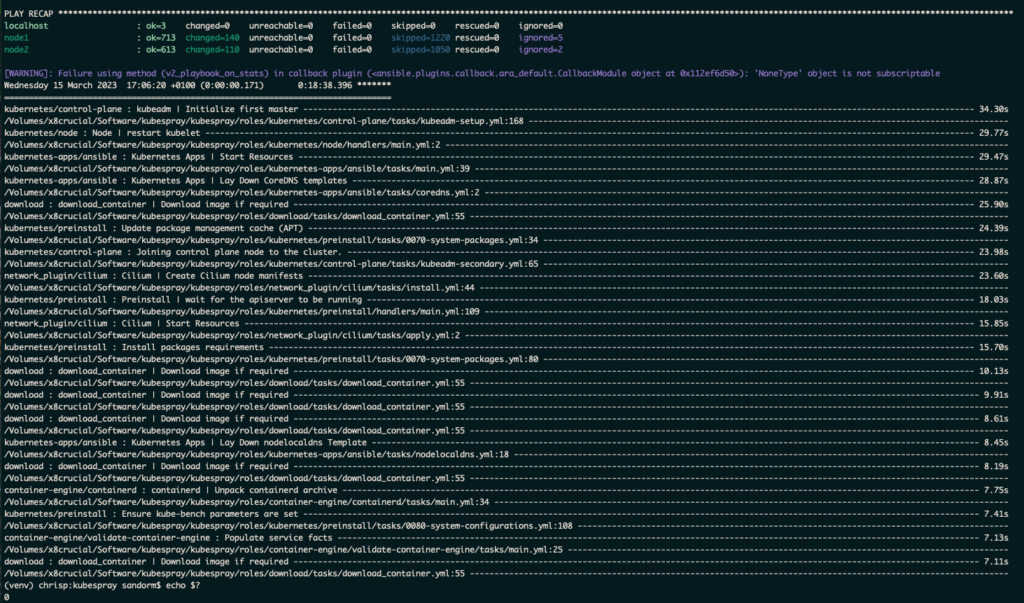

If all went well, we see a summary of the playbook:

How do we access the cluster? Download /etc/kubernetes/admin.conf from a control-plane node, replace localhost:6443 in its server entry with <nodeip>:6443, and set KUBECONFIG to the edited file:
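The download-and-edit step can also be done without an editor. A sketch, assuming root SSH access to the node (as in the scp command) and a server entry reading either 127.0.0.1:6443 or localhost:6443; the helper names are hypothetical. kubespray's k8s-cluster.yml also documents a kubeconfig_localhost option for copying the kubeconfig into the inventory's artifacts directory on the Ansible machine; check the comments in your copy of the file before relying on it.

```shell
# Hypothetical helper: fetch admin.conf from a control-plane node and point it
# at the node's public IP instead of the loopback address.
fetch_kubeconfig() {
  local ip="$1" out="${2:-kubespray.hetzner.conf}"
  scp "root@$ip:/etc/kubernetes/admin.conf" "$out" || return 1
  rewrite_api_server "$ip" "$out"
  chmod 600 "$out"   # the file contains the cluster-admin client key
}

# Rewrite the server: line of a kubeconfig file (portable, no sed -i).
rewrite_api_server() {
  local ip="$1" file="$2" tmp
  tmp=$(mktemp) || return 1
  sed -E "s#(server: https://)(127\.0\.0\.1|localhost):6443#\1$ip:6443#" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Usage: fetch_kubeconfig "$HETZNER1", then export KUBECONFIG=./kubespray.hetzner.conf.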

(venv) chrisp:kubespray sandorm$ scp root@$HETZNER1:/etc/kubernetes/admin.conf kubespray.hetzner.conf

(venv) chrisp:kubespray sandorm$ nvim kubespray.hetzner.conf

(venv) chrisp:kubespray sandorm$ export KUBECONFIG=./kubespray.hetzner.conf

(venv) chrisp:kubespray sandorm$ k get nodes

NAME STATUS ROLES AGE VERSION

node1 Ready control-plane 14m v1.25.6

node2 Ready control-plane 14m v1.25.6

(venv) chrisp:kubespray sandorm$ cat kubespray.hetzner.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJek1ETXhOVEUyTURBeE1Wb1hEVE16TURNeE1qRTJNREF4TVZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTWpDClRsdFp4VnFzcDVwNEVTa3h5ZXdibTJwcVJIcjB0L3VpbVhWa24yV2FSS2tuSE1Gc3dnMG5ZM1FOOHdHOTFnVGoKVm1WbHRSMjd3U2g3R01XTmwvTXdKUEhDQnBCV25YMzRNcks2dUpuMXhYYzFwVDJNNnFOUGJBaWUva1hvZ2Z4Swp1NGxtT2JoUm5Tb0toazM1THJ3UDcyRDhDcFB0R2ZYdExEOE52RGlpTlEzRjRQZWNsU21peTQxbjdodldtK3FmClkrOGk1NWNPZ053OGtiYm40cmlVcHBCaFc0K3lXYUM2bkdtVG5EOW0xS2N6UFJUby93ZEt5eENMUnRvOHZiaVEKNzgvN0RqZTY0aGRya3lUYW02a2tERm9GcVBBcytnY2FpREdBNGUyQnRISXlIT0xxOVFub0ErczBkRlZpMis1UQp0U2dYd2JUT0Q3L2N4WGl4WWc4Q0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZNeU4rRmg3eWhGK1dmM2MySEcxdkhESkhBQWVNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBTEN5dGRtY2hvdGM5bHpBaVVSawpaYllCQlkyRmFQM1dEamEwcSsyYmFZUEdYcFdNTElUZStKcVFsZWFqL2N3bDRvYWNneU1sTVkxYXVDV2JnTzFMCjBzOEQxWGMxNzRONUZZK3dpamVRcGFwYUphM2REU1NNZkw2OXhLaU9VVjBtTjBMamYwdkxXYjNBd1drUjJMR3gKazlUeHpiSXVKaktzbFFwc1lBckNtNy9LanZ2bXlxV0ZCeTE4dE9WQURPdW4wNTJuMkpQQ0lEV3RWNGZ3MzB4aQp4NG5iRnFJeW5FNUZKeFBTbFoyWng0bTFMY1NYTHZqczhRODBvSkZJcU1GNk4veHV0bUNaV1I4bENVa2I1Wi9uCkJPalluWGJUMmJiOGp1NHB4UGVyN1I4RUJnbkZmSG41T0ZWMlR4a0hQMEp3VzhrMml5a1gwYlBNWVJqdFhEdTQKQnRrPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://91.XXX.XXX.XXX:6443

name: cluster.local

contexts:

- context:

cluster: cluster.local

user: kubernetes-admin

name: kubernetes-admin@cluster.local

current-context: kubernetes-admin@cluster.local

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdtZ0F3SUJBZ0lJRnZWeVRaaU0zTTR3RFFZSktvWklodm...

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBdE52SEVlNDJpTVBEbUVuczZiSkduYW1DTkhjSno2eUxwaW9XRGRCYk5zeThDKzE0ClA0NFM0Y1d4Wmp2OTgyMjVsNFhtaERFcUFYaExQNUlZdEZ5UVpJTFN3RkNS...

Check some information:

# alias k=kubectl

(venv) chrisp:kubespray sandorm$ k version --short

Client Version: v1.25.2

Kustomize Version: v4.5.7

Server Version: v1.25.6

k version --output=yaml

clientVersion:

buildDate: "2022-09-21T14:33:49Z"

compiler: gc

gitCommit: 5835544ca568b757a8ecae5c153f317e5736700e

gitTreeState: clean

gitVersion: v1.25.2

goVersion: go1.19.1

major: "1"

minor: "25"

platform: darwin/amd64

kustomizeVersion: v4.5.7

serverVersion:

buildDate: "2023-01-18T19:15:26Z"

compiler: gc

gitCommit: ff2c119726cc1f8926fb0585c74b25921e866a28

gitTreeState: clean

gitVersion: v1.25.6

goVersion: go1.19.5

major: "1"

minor: "25"

platform: linux/amd64

(venv) chrisp:kubespray sandorm$ k get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system cilium-2nf28 1/1 Running 0 19m

kube-system cilium-bmkwg 1/1 Running 0 19m

kube-system cilium-operator-79cf96547-gbvc2 1/1 Running 0 19m

kube-system cilium-operator-79cf96547-vzftv 1/1 Running 0 19m

kube-system coredns-588bb58b94-2cfm6 1/1 Running 0 17m

kube-system coredns-588bb58b94-p4f2z 1/1 Running 0 15m

kube-system dns-autoscaler-5b9959d7fc-wgtbr 1/1 Running 0 17m

kube-system kube-apiserver-node1 1/1 Running 1 21m

kube-system kube-apiserver-node2 1/1 Running 1 21m

kube-system kube-controller-manager-node1 1/1 Running 1 21m

kube-system kube-controller-manager-node2 1/1 Running 1 21m

kube-system kube-proxy-j2fsc 1/1 Running 0 20m

kube-system kube-proxy-wxk8j 1/1 Running 0 20m

kube-system kube-scheduler-node1 1/1 Running 1 21m

kube-system kube-scheduler-node2 1/1 Running 1 21m

kube-system nodelocaldns-fdvn4 1/1 Running 0 17m

kube-system nodelocaldns-khxtl 1/1 Running 0 17m
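Instead of re-running get pods until every pod shows Running, kubectl wait can block until the pods report the Ready condition. A sketch; the helper name, the namespace default and the 300s timeout are my own choices.

```shell
# Hypothetical helper: wait until every pod in a namespace is Ready (or time out).
wait_for_pods() {
  local ns="${1:-kube-system}" timeout="${2:-300s}"
  kubectl wait --namespace "$ns" --for=condition=Ready pod --all --timeout="$timeout"
}
```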

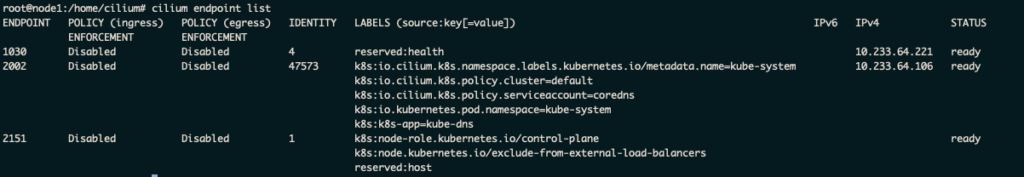

Check cilium health on a cilium pod.

(venv) chrisp:kubespray sandorm$ k exec -it pod/cilium-2nf28 -n kube-system -- bash

Defaulted container "cilium-agent" out of: cilium-agent, mount-cgroup (init), apply-sysctl-overwrites (init), clean-cilium-state (init)

root@node1:/home/cilium# cilium status

KVStore: Ok etcd: 1/1 connected, lease-ID=5f3386e5fdb71a9e, lock lease-ID=5f3386e5fdb71aa0, has-quorum=true: https://91.107.199.167:2379 - 3.5.6 (Leader)

Kubernetes: Ok 1.25 (v1.25.6) [linux/amd64]

Kubernetes APIs: ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumNetworkPolicy", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]

KubeProxyReplacement: Probe [eth0 91.107.199.167]

Host firewall: Disabled

CNI Chaining: none

Cilium: Ok 1.12.1 (v1.12.1-4c9a630)

NodeMonitor: Disabled

Cilium health daemon: Ok

IPAM: IPv4: 3/254 allocated from 10.233.64.0/24,

BandwidthManager: Disabled

Host Routing: Legacy

Masquerading: IPTables [IPv4: Enabled, IPv6: Disabled]

Controller Status: 31/31 healthy

Proxy Status: OK, ip 10.233.64.151, 0 redirects active on ports 10000-20000

Global Identity Range: min 256, max 65535

Hubble: Disabled

Encryption: Disabled

Cluster health: 2/2 reachable (2023-03-15T16:23:14Z)

Cilium has many more subcommands, for example for health and endpoints…
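These can also be run without an interactive shell. A sketch, assuming the agent container is named cilium-agent as in the output above; the helper name is hypothetical. cilium endpoint list shows the endpoints managed on that node, and cilium-health status reports connectivity to the other nodes.

```shell
# Hypothetical helper: run a command inside the cilium-agent container of a Cilium pod.
# Naming the container with -c avoids the "Defaulted container" notice seen above.
cilium_agent() {
  local pod="$1"
  shift
  kubectl -n kube-system exec "$pod" -c cilium-agent -- "$@"
}

# Usage, with a pod name taken from `k get pods -A`:
#   cilium_agent cilium-2nf28 cilium endpoint list
#   cilium_agent cilium-2nf28 cilium-health status
```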